I decided to migrate the blog to Github Pages.

Blogger's web editing interface is a pain, tweaking your blog's theme is a nightmare, and I really don't like being on a Google-hosted platform.

Github Pages, on the other hand, offers super easy styling and customization with Jekyll, and takes Markdown files, meaning you can write posts in whatever editor you want. They seem to be a great host, too.

The new home of Sohliloquies is: https://wootfish.github.io/sohliloquies/

Sohliloquies

Thursday, April 19, 2018

Thursday, December 14, 2017

Net Neutrality and the Theseus DHT

The design for Theseus, as it stands, consists of a distributed hash table (DHT), a torrent client, a distributed search algorithm, and a web-based UI. Until now, I'd been developing everything as one monolithic entity. The purpose of this blog post is to make two announcements: first, that I'm going to be breaking out the Theseus DHT into a stand-alone software package; second, that I'm setting a hard release date for this package: New Year's Eve.

I'd also like to talk a little about the motivations behind this decision.

The result of breaking out the DHT like this is that anyone who needs a robust, secure distributed hash table can just plug this one into their project and be good to go. And, of course, it'll come with full documentation, so anyone wanting to develop their own libraries will have all the resources they need to do so.

The network's ability to detect and mitigate Sybil attacks increases as the network itself grows, which is another reason for opening up the Theseus DHT as a stand-alone entity. I want people to use this, because the more people we have using it, the stronger it gets. Everyone wins.

I'd also like to talk a little about the motivations behind this decision.

The result of breaking out the DHT like this is that anyone who needs a robust, secure distributed hash table can just plug this one into their project and be good to go. And, of course, it'll come with full documentation, so anyone wanting to develop their own libraries will have all the resources they need to do so.

This is exciting and a little bit scary. After all, the DHT library isn't actually done yet. But deadlines are among the strongest motivating forces I know, so it makes sense to put this project on one.

There are some technical reasons why I'm making the DHT a free-standing component, and there are some political reasons as well.

I'm writing this on the eve of the FCC's net neutrality vote. I fully expect them to vote to repeal what little protections we have in place. This is a deeply immoral act, and one which will have far-reaching consequences. It falls to those of us who see the potential of a free, open internet, who recognize the internet's cultural and educational power, to do everything we can to resist.

Virtually every step in the internet's evolution has been unexpected, and every step of the way the companies responsible for its infrastructure have proven their inability to understand the new technologies that're keeping them in business. The first major competitors to AT&T's deep-sea cable monopoly didn't even realize they were building internet infrastructure -- even in the late 90s, they still thought they'd be making most of their money off phone calls. Unbeknownst to them, of course, society had other plans.

So it was then, and so it is now. ISPs are trying to claim control of the internet, essentially claiming that because they own the cables you send data across to access web sites, they should get to decide which sites you go on and how slow those sites will load. That's about as fair as a car dealership trying to say where you can or can't drive -- and remotely disabling your car if you refuse to listen.

The response to this move has been fast and clear. The public is virtually unanimous in opposing the loss of net neutrality: calling our elected officials, submitting pro-net-neutralty public comments to the FCC, leveraging analytics to reveal many anti-net-neutrality comments as faked, raising awareness through social media, writing articles -- an amazing show of effort and unity, and one which will almost certainly be for nothing, because the FCC has been bought.

What comes next? Defense.

There are ways to circumvent this exploitative behavior. Using a VPN, using Tor, or getting your connection from a local ISP that has explicitly committed to respecting your rights -- any of these can help, though none are perfect solutions.

In the end, what this comes down to is a design failure. There is no need for the carrier of network traffic to know what sort of traffic they are carrying, and yet many of the protocols the Internet is built on readily give up this data -- usually because their authors didn't know any better. Not that I'm criticizing them -- after all, even just ten years ago the internet was a very different place.

We don't want to leave those days behind, but it's time to face facts, and the fact is that modern protocols need to be designed with the full awareness that the network is under constant surveillance. This means we need strong encryption, strong authentication wherever possible, and strong resistance to fingerprinting.

After all, they can't selectively throttle your traffic if all your traffic looks the same.

We don't want to leave those days behind, but it's time to face facts, and the fact is that modern protocols need to be designed with the full awareness that the network is under constant surveillance. This means we need strong encryption, strong authentication wherever possible, and strong resistance to fingerprinting.

After all, they can't selectively throttle your traffic if all your traffic looks the same.

To a passive observer, all Theseus DHT traffic is indistinguishable from random noise. Even message sizes can be made to follow arbitrary patterns or no pattern. All of this makes the protocol very hard to fingerprint. The DHT also offers resistance to man-in-the-middle attacks (assuming a trusted introduction to the network) and offers unparalleled resistance to Sybil attacks. You can read more about that here.

If other applications adopt the Theseus DHT, then a user's presence in the DHT peer swarm doesn't even reveal that they're using Theseus -- they could be using any of the apps that plug into the DHT.

If other applications adopt the Theseus DHT, then a user's presence in the DHT peer swarm doesn't even reveal that they're using Theseus -- they could be using any of the apps that plug into the DHT.

The network's ability to detect and mitigate Sybil attacks increases as the network itself grows, which is another reason for opening up the Theseus DHT as a stand-alone entity. I want people to use this, because the more people we have using it, the stronger it gets. Everyone wins.

I wish we lived in a world where these sorts of measures were unnecessary. But if they are necessary, then let's make them good as fuck.

Tuesday, September 12, 2017

Book Notes

Post-graduation, I've been finding it easier to carve out free time. Some of this I've spent on Theseus and other projects. More of it I've spent reading. It's important, I think, for technical people to retain some analog hobbies.

These are notes on some books I've read lately. Not everything -- just the stuff I've liked.

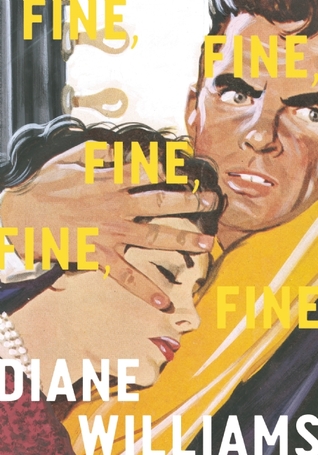

This book's stories are as striking as its cover. It's hard to summarize but easy to recommend. The stories are all really short (three pages or less), and they're put together with economy, wit, and endless inventiveness.

Fine, Fine, Fine, Fine, Fine's subject is life, sliced thin. Its stories, many of which feel more like anecdotes, capture significant moments in people's lives, rarely dramatic but always emotionally fraught.

It's a funny thing about life how small details can take on disproportionate emotional significance. So much of the texture of life comes from little things, things that stick with us even though (or maybe because?) they might not have even been noticed by anyone else. These are the details that we still remember, against all odds, years later. Trying to describe those moments is an easy way to sound silly, or obsessive, or totally out of it -- and yet Diane Williams manages to craft compelling narratives from them.

She presents these series of details, their significance to the various narrators managing to capture the essence of these people's entire lives. The character portraits thus created are uncannily vivid, the more so for their shortness. It's exciting to see an author do so much with so little.

Occasionally one hits a story so spare and inscrutable as to defy easy comprehension. That's to be expected, of course. If you feel like everything you read makes immediate sense, you're probably not pushing yourself. But in a way that'll be familiar to any fan of the avant-garde, the presence of these inscrutable elements weirdly enriches their surroundings, elevating their paradoxical-yet-sensical neighbors' standing even further. Every single story that clicks feels like a little secret treasure.

I've also got Williams' Vicky Swanky is a Beauty sitting on my to-read shelf, and after this I'm really looking forward to it.

Highlights: The Romantic Life, Living Deluxe, How Blown Up, There Is Always a Hesitation Before Turning In a Finished Job, Lamb Chops, Cod, Girl With a Pencil, A Little Bottle of Tears

This is the first of DeLillo's novels that I've read. I loved it. Having read so many authors who were admirers of his, it's kind of surreal recognizing now which of their motifs were borrowed, which of their literary tricks were drawn from this source. I'm not sure why it took me so long to start paying attention to the man behind the curtain. DeLillo's influence on his successors is immediately recognizable, as is the reason for it.

White Noise itself is so clever and nuanced that it's a bit of a struggle to resist spending the rest of this post just analyzing it. I won't put you through that. Instead, here are some things about it that really struck me.

The writing of the main character's family life is inspired. I found myself recognizing their "modern" tics to a startling degree (especially considering the book was published well before my time). Some of the characters' particular flaws and dynamics are scarily true-to-life. I wonder if they came off as exaggerated at the time.

The topic of media saturation is, by now, done-to-death several times over. Remarkably, White Noise, which predates a whole lot of the stuff on this topic, has also managed to stay fresher than most of it. It's actually a joy to read.

The perpetual struggle for later authors trying to mine this vein was to find a way to deal with fundamentally soul-crushing, oppressive topics in a non-soul-crushing manner, to evoke and represent how these characters' environment drags them down, and to leave the reader with a honest sense of the significance of this, and yet to somehow avoid dragging the reader down with them. This is a formidable task, to say the least, and one that has claimed more than a few careers. Somehow, faced with this challenge, White Noise doesn't just succeed -- it makes success look effortless. The book portrays suffocating, exhausting, nauseating, literally borderline-schizophrenic information overload in a way that actually feels somehow refreshing, reassuring, even revitalizing.

There are a few devices DeLillo uses here. The most overt one is how interspersed throughout the narrative we find strange, obtrusive interjections, total non-sequiturs with regard to what came before and what comes after, and almost always bookended by paragraph breaks. For instance:

Late in the evening a husband and wife are half-arguing in bed, and interspersed with the dialogue are sound bites from a nature show on TV in the next room over, the cloyingly benign narration undercutting the drama of their conversation in an all-too-familiar way.

Two academics walk across campus, deep in conversation, ostensibly engaged with grand ideas but also involuntarily free-associating brand names as they walk, never giving voice to these intrusive thoughts but nevertheless experiencing the private indignity.

A man gets into a car with murderous intent -- but before turning the key, he reflexively takes inventory of "trash caddies fixed to the dashboard and seat-backs, dangling plastic bags full of gum wrappers, ticket stubs, lipstick-smeared tissues, crumpled soda cans, crumpled circulars and receipts, ashtray debris ... Thus familiarized, I started the engine..."

The overall effect is to create an atmosphere that's not just devoid of drama and excitement, but one in which any that might crop up by chance is actively suppressed. This suffusion of useless information proves somehow fundamentally incompatible with any form of high drama.

These interjections (the frequency of which seems to correlate closely with the level of narrative tension) are mercifully short. They aren't the focus -- in fact, that's precisely their purpose, to not be focused on. They're just informational entropy, background radiation, nothing more than so much (dare I say it?) white noise.

My guess is that most people would call this book postmodern. Certainly it has been inspirational for a number of postmodernists. Even so, I don't think I would agree with the classification.

Like it or not, I think we can agree (and I say this from a place of love) that one of postmodernism's characteristic traits is a certain cleverer-than-thou tone, an inescapably smug quality of self-congratulation. Even when it's self-effacing, it's smug about how self-effacing it is, as if the writer sat down with the stated goal of writing the humblest-seeming passage they could, failing to realizing the irony inherent in that goal. This is a genre where an author might ask the reader to cut out a page and fold it into a Möbius strip, and no one even bothers to comment on how fucking dumb that is.

All of these qualities are conspicuously and mercifully absent in White Noise. The literary eccentricities in which DeLillo does partake feel less like self-conscious "innovations" and more like the application of well-worn techniques in a wholly new, wholly modern environment.

One could even argue the novel implicitly serves as an exploration of just what makes the modern world so draining, from whence comes its conspicuous staticness (multiple senses of the word intended here). Its use of standard techniques in creating a thoroughly nonstandard narrative forces us to ask how its world differs from those we're used to finding in our fiction. The more time we spend on this question, the more the novel drives us, through implicit prompts, to a fruitful exploration of precisely the ways in which the world has changed and continues to change, and what these changes are doing to us.

The world DeLillo depicts is one suffused by radio and television, but still pre-Internet. Yet his characterization of its psychic qualities, of its effects on ordinary people, still ring true decades later. The ends for which we use technology, and the ways we react, psychologically, to actually having those ends satisfied, have changed far less than the technologies themselves. Heinrich would have loved Wikipedia (for better or worse). Babette's obsession with call-in radio shows presages the appeal of niche interest-based social media cliques. The family's disaster-gawking would've been perfectly indulged by YouTube. And the "émigré" New Yorkers' rapid-fire and "obvious" analyses of these surreptitious interests' underpinnings still ring uncannily true. Technology changes, but people don't.

Those who've read the book will notice I haven't given a single word yet to its most overtly noteworthy plot point, the "airborne toxic event". There's not much that can be said on that topic in the presence of virgin ears. I did promise earlier to avoid spoilers. Suffice to say that I found the role this event plays in the greater plot to be inspired and perfectly in keeping with everything discussed above. And lately, as wildfires rain ash down from the Seattle sky and people walk around with air-filter masks, the idea of the Event has proven to be surreally immediate.

This is an exceptional novel, moreso because it weighs in at 300-odd pages and fits more into them than you'd usually find in a book twice its length. Recommended to anyone who found themselves intrigued by any of the above. And, if any DeLillo fans have suggestions for what I should read next from his startlingly expansive bibliography, let me know.

It goes without saying that when it comes to issues of digital privacy and security, Bruce Schneier is just about the smartest, most well-informed writer around.

For those who don't know, in '94 Schneier wrote the book on cryptography (Applied Cryptography), then in '96 he wrote the other book on cryptography (Practical Cryptography -- the first book I ever read on the subject, as it happens). He's since written, blogged, and newslettered extensively, writing primarily on security issues. The dude has long since achieved luminary security guru status. If you only pay attention to one person on security issues, that one person should be Bruce Schneier.

If, like myself, you enjoy his blog but wish the posts were longer or the essays were more frequent, do yourself a favor and pick up this book. If you want to know the secret ways your privacy is being invaded every day, this is the book for you. If the topic seems too daunting to comprehend, or if you try to comfort yourself by repeating a mantra like, "I'm not interesting enough to track, they don't care about people like me, my obscurity secures me," this book will almost certainly change your perspective. The modern techniques of surveillance render it such a low-cost activity that this "who-wants-to-bother-watching-little-ol-me" reasoning just doesn't work any more. The days of targeted surveillance are numbered; these days, it turns out to be more effective to just gather all the data you can on everyone, everywhere, all the time. The game has changed, and Schneier lucidly exposes how and why.

What's bizarre is that this whole surveillance apparatus emerged in the last few decades. It's wholly modern, and both qualitatively and quantitatively it is wholly different from anything else in human history. This means that when it comes to this stuff, some very common tasks turn out to be very hard:

I'm thinking of writing a longform post about this book, so I'll try to keep this one short. Walkaway is fantastic, and has just about the best set of back-cover endorsements I've ever seen:

"The darker the hour, the better the moment for a rigorously imagined utopian fiction. Walkaway is now the best contemporary example I know of, its utopia glimpsed after fascinatingly extrapolated revolutionary struggle. A wonderful novel: everything we've come to expect from Cory Doctorow and more."

--William Gibson

"A dystopian future is in no way inevitable; Walkaway reminds us that the world we choose to build is the one we'll inhabit."

--Edward Snowden

"In a world full of easy dystopias, Cory Doctorow writes the hard utopia, and what do you know, his utopia is both more thought-provoking and more fun."

--Kim Stanley Robinson

"Cory Doctorow has authored the Bhagavad Gita of hacker/maker/burner/open source/git/gnu/wiki/99%/adjunct faculty/Anonymous/shareware/thingiverse/cypherpunk/LGTBQIA*/squatter/upcycling culture and zipped it down into a pretty damned tight techno-thriller with a lot of sex in it."

--Neal Stephenson

For a book like this, it's hard to imagine four better people to get blurbs from, and everything they say is spot-on. This is a great book, and it cannot have been easy to write. I've always thought that if Neal Stephenson had a more overtly political bent he'd sound a lot like Cory Doctorow (the correspondence is, of course, strongly complimentary for both parties), and this novel is strong evidence for that suspicion.

Walkaway is a novel of ideas, primarily concerned with social structures: what is possible, what is practical, and what is likely. Doctorow's creativity is striking, and he pulls no punches. This novel is optimistic and idealistic but by no means starry-eyed.

One interesting thing about the reviews quoted above is how three out of four make direct reference to this being a utopian novel. My suspicion is that this was not entirely by coincidence: if I were Cory Doctorow, I'd probably be concerned about being misinterpreted. In a sense, this novel is an exploration of ways in which utopia can emerge from dystopia, and it's easy to imagine how people would miss the former in a world where so much of what we read is the latter.

The basic premise -- skirting spoilers as much as possible -- is that modern society has grown almost intolerably late-capitalist, stratified between a worker class without even a hint of upward mobility, and an upper class, the "zottas" (an unimaginably large new SI prefix, one assumes, coined to describe the magnitude of their inflation- and greed-bloated wealth). The zottas live by a different set of rules, and from their perspective society is a kind of utopia -- at least, as long as they can keep from thinking too hard about it. This comes easily to most.

Meanwhile, for the worker class, all you have is wage-slavery in a world of ultra-pervasive surveillance. Most people lead lives of quiet irrelevance, barely getting by in a world that only cares about them inasmuch as it cares about their labor. The risk-takers throw "communist parties", breaking into unused warehouses and recommissioning abandoned manufacturing equipment, letting it spit out high-quality goods and giving them away, first-come-first-serve. Free as in beer -- and the beer's free, too.

The interesting thing about having automated fabrication of this quality is that it invalidates a core axiom of capitalism: that there's only so much nice stuff, and it has to get divvied up somehow, and an unfair system is better than no system at all. People in Walkaway's "default" society still live under this assumption, but a growing number come to realize the deck is stacked against them and, well, they walk away.

In walkaway, people share their fab machines gladly as long as you're not an asshole. Feedstock for them is abundant from salvage (left over from centuries of war and climate change, one assumes). Show up with feedstock and you're everyone's new best friend. With the labor cost of producing something you've got a design for reduced to virtually nil, the idea of having long-term possessions becomes sort of quaint, and the idea of purchasing things is flat-out incoherent. Why bother?

So while the people in "default" run ever-faster on their hamster wheels, the walkaways step off, rest their legs, and take a moment to look around for the first time. The book is about what they see, and how the rest of the world reacts to what they've done. It gets really dark in some places, but there's a utopian vision throughout that I found incredibly compelling. The world we choose to build is the one we'll inhabit.

These are notes on some books I've read lately. Not everything -- just the stuff I've liked.

This book's stories are as striking as its cover. It's hard to summarize but easy to recommend. The stories are all really short (three pages or less), and they're put together with economy, wit, and endless inventiveness.

Fine, Fine, Fine, Fine, Fine's subject is life, sliced thin. Its stories, many of which feel more like anecdotes, capture significant moments in people's lives, rarely dramatic but always emotionally fraught.

It's a funny thing about life how small details can take on disproportionate emotional significance. So much of the texture of life comes from little things, things that stick with us even though (or maybe because?) they might not have even been noticed by anyone else. These are the details that we still remember, against all odds, years later. Trying to describe those moments is an easy way to sound silly, or obsessive, or totally out of it -- and yet Diane Williams manages to craft compelling narratives from them.

She presents these series of details, their significance to the various narrators managing to capture the essence of these people's entire lives. The character portraits thus created are uncannily vivid, the more so for their shortness. It's exciting to see an author do so much with so little.

Occasionally one hits a story so spare and inscrutable as to defy easy comprehension. That's to be expected, of course. If you feel like everything you read makes immediate sense, you're probably not pushing yourself. But in a way that'll be familiar to any fan of the avant-garde, the presence of these inscrutable elements weirdly enriches their surroundings, elevating their paradoxical-yet-sensical neighbors' standing even further. Every single story that clicks feels like a little secret treasure.

I've also got Williams' Vicky Swanky is a Beauty sitting on my to-read shelf, and after this I'm really looking forward to it.

Highlights: The Romantic Life, Living Deluxe, How Blown Up, There Is Always a Hesitation Before Turning In a Finished Job, Lamb Chops, Cod, Girl With a Pencil, A Little Bottle of Tears

This is the first of DeLillo's novels that I've read. I loved it. Having read so many authors who were admirers of his, it's kind of surreal recognizing now which of their motifs were borrowed, which of their literary tricks were drawn from this source. I'm not sure why it took me so long to start paying attention to the man behind the curtain. DeLillo's influence on his successors is immediately recognizable, as is the reason for it.

White Noise itself is so clever and nuanced that it's a bit of a struggle to resist spending the rest of this post just analyzing it. I won't put you through that. Instead, here are some things about it that really struck me.

The writing of the main character's family life is inspired. I found myself recognizing their "modern" tics to a startling degree (especially considering the book was published well before my time). Some of the characters' particular flaws and dynamics are scarily true-to-life. I wonder if they came off as exaggerated at the time.

The topic of media saturation is, by now, done-to-death several times over. Remarkably, White Noise, which predates a whole lot of the stuff on this topic, has also managed to stay fresher than most of it. It's actually a joy to read.

The perpetual struggle for later authors trying to mine this vein was to find a way to deal with fundamentally soul-crushing, oppressive topics in a non-soul-crushing manner, to evoke and represent how these characters' environment drags them down, and to leave the reader with a honest sense of the significance of this, and yet to somehow avoid dragging the reader down with them. This is a formidable task, to say the least, and one that has claimed more than a few careers. Somehow, faced with this challenge, White Noise doesn't just succeed -- it makes success look effortless. The book portrays suffocating, exhausting, nauseating, literally borderline-schizophrenic information overload in a way that actually feels somehow refreshing, reassuring, even revitalizing.

There are a few devices DeLillo uses here. The most overt one is how interspersed throughout the narrative we find strange, obtrusive interjections, total non-sequiturs with regard to what came before and what comes after, and almost always bookended by paragraph breaks. For instance:

Late in the evening a husband and wife are half-arguing in bed, and interspersed with the dialogue are sound bites from a nature show on TV in the next room over, the cloyingly benign narration undercutting the drama of their conversation in an all-too-familiar way.

Two academics walk across campus, deep in conversation, ostensibly engaged with grand ideas but also involuntarily free-associating brand names as they walk, never giving voice to these intrusive thoughts but nevertheless experiencing the private indignity.

A man gets into a car with murderous intent -- but before turning the key, he reflexively takes inventory of "trash caddies fixed to the dashboard and seat-backs, dangling plastic bags full of gum wrappers, ticket stubs, lipstick-smeared tissues, crumpled soda cans, crumpled circulars and receipts, ashtray debris ... Thus familiarized, I started the engine..."

The overall effect is to create an atmosphere that's not just devoid of drama and excitement, but one in which any that might crop up by chance is actively suppressed. This suffusion of useless information proves somehow fundamentally incompatible with any form of high drama.

These interjections (the frequency of which seems to correlate closely with the level of narrative tension) are mercifully short. They aren't the focus -- in fact, that's precisely their purpose, to not be focused on. They're just informational entropy, background radiation, nothing more than so much (dare I say it?) white noise.

My guess is that most people would call this book postmodern. Certainly it has been inspirational for a number of postmodernists. Even so, I don't think I would agree with the classification.

Like it or not, I think we can agree (and I say this from a place of love) that one of postmodernism's characteristic traits is a certain cleverer-than-thou tone, an inescapably smug quality of self-congratulation. Even when it's self-effacing, it's smug about how self-effacing it is, as if the writer sat down with the stated goal of writing the humblest-seeming passage they could, failing to realizing the irony inherent in that goal. This is a genre where an author might ask the reader to cut out a page and fold it into a Möbius strip, and no one even bothers to comment on how fucking dumb that is.

All of these qualities are conspicuously and mercifully absent in White Noise. The literary eccentricities in which DeLillo does partake feel less like self-conscious "innovations" and more like the application of well-worn techniques in a wholly new, wholly modern environment.

One could even argue the novel implicitly serves as an exploration of just what makes the modern world so draining, from whence comes its conspicuous staticness (multiple senses of the word intended here). Its use of standard techniques in creating a thoroughly nonstandard narrative forces us to ask how its world differs from those we're used to finding in our fiction. The more time we spend on this question, the more the novel drives us, through implicit prompts, to a fruitful exploration of precisely the ways in which the world has changed and continues to change, and what these changes are doing to us.

The world DeLillo depicts is one suffused by radio and television, but still pre-Internet. Yet his characterization of its psychic qualities, of its effects on ordinary people, still ring true decades later. The ends for which we use technology, and the ways we react, psychologically, to actually having those ends satisfied, have changed far less than the technologies themselves. Heinrich would have loved Wikipedia (for better or worse). Babette's obsession with call-in radio shows presages the appeal of niche interest-based social media cliques. The family's disaster-gawking would've been perfectly indulged by YouTube. And the "émigré" New Yorkers' rapid-fire and "obvious" analyses of these surreptitious interests' underpinnings still ring uncannily true. Technology changes, but people don't.

Those who've read the book will notice I haven't given a single word yet to its most overtly noteworthy plot point, the "airborne toxic event". There's not much that can be said on that topic in the presence of virgin ears. I did promise earlier to avoid spoilers. Suffice to say that I found the role this event plays in the greater plot to be inspired and perfectly in keeping with everything discussed above. And lately, as wildfires rain ash down from the Seattle sky and people walk around with air-filter masks, the idea of the Event has proven to be surreally immediate.

This is an exceptional novel, moreso because it weighs in at 300-odd pages and fits more into them than you'd usually find in a book twice its length. Recommended to anyone who found themselves intrigued by any of the above. And, if any DeLillo fans have suggestions for what I should read next from his startlingly expansive bibliography, let me know.

It goes without saying that when it comes to issues of digital privacy and security, Bruce Schneier is just about the smartest, most well-informed writer around.

For those who don't know, in '94 Schneier wrote the book on cryptography (Applied Cryptography), then in '96 he wrote the other book on cryptography (Practical Cryptography -- the first book I ever read on the subject, as it happens). He's since written, blogged, and newslettered extensively, writing primarily on security issues. The dude has long since achieved luminary security guru status. If you only pay attention to one person on security issues, that one person should be Bruce Schneier.

If, like myself, you enjoy his blog but wish the posts were longer or the essays were more frequent, do yourself a favor and pick up this book. If you want to know the secret ways your privacy is being invaded every day, this is the book for you. If the topic seems too daunting to comprehend, or if you try to comfort yourself by repeating a mantra like, "I'm not interesting enough to track, they don't care about people like me, my obscurity secures me," this book will almost certainly change your perspective. The modern techniques of surveillance render it such a low-cost activity that this "who-wants-to-bother-watching-little-ol-me" reasoning just doesn't work any more. The days of targeted surveillance are numbered; these days, it turns out to be more effective to just gather all the data you can on everyone, everywhere, all the time. The game has changed, and Schneier lucidly exposes how and why.

What's bizarre is that this whole surveillance apparatus emerged in the last few decades. It's wholly modern, and both qualitatively and quantitatively it is wholly different from anything else in human history. This means that when it comes to this stuff, some very common tasks turn out to be very hard:

- It's hard to level-headedly evaluate the risks, costs, and benefits that come with this apparatus, because we have no reference points, no precedent, no examples to draw from.

- It's hard to even know the full extent of what is taking place, because it's so new that there are still a lot of well-kept secrets.

- (At one point, Schneier mentions that one of the most surreal things about the Snowden leaks was how the actual facts of the NSA's operations made even the craziest civilian-sector conspiracy theories about them seem plain by contrast)

- It's hard to regulate this space, because (again) we have no proven reference points to use when drawing up new laws, and because any existing precedent we do have is woefully under-equipped to deal with the issues we currently face.

- (Schneier does actually cite a number of relevant, modern referenda and pieces of legislation from various governments and international bodies, which he suggests as good models for future legislation)

- It's hard, as a civilian, to protect yourself in "the hidden battles to collect your data and control your world" -- and it's just about impossible to withdraw from the fight completely.

- It is, however, alarmingly easy to throw up your hands and give up, to relinquish your fundamental human right to privacy, to stop trying to protect yourself or even know what all you'd be trying to protect yourself against.

These issues and more are addressed within this book's 400 pages, in impressively clear, authoritative, information-dense fashion. It's a whirlwind tour of where we are in the USA and worldwide with respect to issues of digital privacy and mass data collection, of how we got here, and of where we need to go if we want to transform our current society into one that respects the rights and wishes of its constituents. It includes actionable suggestions for governments, for corporations, and for individuals.

In the book's closing acknowledgements section, Schneier explicitly thanks Edward Snowden, "...whose courageous actions resulted in the global conversation we are now having about surveillance. It's not an exaggeration to say that I would not have written this book had he not done what he did." Data and Goliath's premise and content both confirm Snowden's early statement to Laura Poitras that at NSA, "we are building the greatest weapon for oppression in the history of man, yet its directors exempt themselves from accountability." This is true both in government and private industry: companies that gather and sell individuals' data without consent are everywhere, and none of them acknowledge the impact of what they do.

I consider myself to be someone who follows these issues pretty closely, and I still learned a ton from this book. I've been eagerly recommending it to anyone who I think would read it. In fact, if you want, drop me a line and I'll probably even buy you a copy -- seriously.

I'm thinking of writing a longform post about this book, so I'll try to keep this one short. Walkaway is fantastic, and has just about the best set of back-cover endorsements I've ever seen:

"The darker the hour, the better the moment for a rigorously imagined utopian fiction. Walkaway is now the best contemporary example I know of, its utopia glimpsed after fascinatingly extrapolated revolutionary struggle. A wonderful novel: everything we've come to expect from Cory Doctorow and more."

--William Gibson

"A dystopian future is in no way inevitable; Walkaway reminds us that the world we choose to build is the one we'll inhabit."

--Edward Snowden

"In a world full of easy dystopias, Cory Doctorow writes the hard utopia, and what do you know, his utopia is both more thought-provoking and more fun."

--Kim Stanley Robinson

"Cory Doctorow has authored the Bhagavad Gita of hacker/maker/burner/open source/git/gnu/wiki/99%/adjunct faculty/Anonymous/shareware/thingiverse/cypherpunk/LGTBQIA*/squatter/upcycling culture and zipped it down into a pretty damned tight techno-thriller with a lot of sex in it."

--Neal Stephenson

For a book like this, it's hard to imagine four better people to get blurbs from, and everything they say is spot-on. This is a great book, and it cannot have been easy to write. I've always thought that if Neal Stephenson had a more overtly political bent he'd sound a lot like Cory Doctorow (the correspondence is, of course, strongly complimentary for both parties), and this novel is strong evidence for that suspicion.

Walkaway is a novel of ideas, primarily concerned with social structures: what is possible, what is practical, and what is likely. Doctorow's creativity is striking, and he pulls no punches. This novel is optimistic and idealistic but by no means starry-eyed.

One interesting thing about the reviews quoted above is how three out of four make direct reference to this being a utopian novel. My suspicion is that this was not entirely by coincidence: if I were Cory Doctorow, I'd probably be concerned about being misinterpreted. In a sense, this novel is an exploration of ways in which utopia can emerge from dystopia, and it's easy to imagine how people would miss the former in a world where so much of what we read is the latter.

The basic premise -- skirting spoilers as much as possible -- is that modern society has grown almost intolerably late-capitalist, stratified between a worker class without even a hint of upward mobility, and an upper class, the "zottas" (an unimaginably large new SI prefix, one assumes, coined to describe the magnitude of their inflation- and greed-bloated wealth). The zottas live by a different set of rules, and from their perspective society is a kind of utopia -- at least, as long as they can keep from thinking too hard about it. This comes easily to most.

Meanwhile, for the worker class, all you have is wage-slavery in a world of ultra-pervasive surveillance. Most people lead lives of quiet irrelevance, barely getting by in a world that only cares about them inasmuch as it cares about their labor. The risk-takers throw "communist parties", breaking into unused warehouses and recommissioning abandoned manufacturing equipment, letting it spit out high-quality goods and giving them away, first-come-first-serve. Free as in beer -- and the beer's free, too.

The interesting thing about having automated fabrication of this quality is that it invalidates a core axiom of capitalism: that there's only so much nice stuff, and it has to get divvied up somehow, and an unfair system is better than no system at all. People in Walkaway's "default" society still live under this assumption, but a growing number come to realize the deck is stacked against them and, well, they walk away.

In walkaway, people share their fab machines gladly as long as you're not an asshole. Feedstock for them is abundant from salvage (left over from centuries of war and climate change, one assumes). Show up with feedstock and you're everyone's new best friend. With the labor cost of producing something you've got a design for reduced to virtually nil, the idea of having long-term possessions becomes sort of quaint, and the idea of purchasing things is flat-out incoherent. Why bother?

So while the people in "default" run ever-faster on their hamster wheels, the walkaways step off, rest their legs, and take a moment to look around for the first time. The book is about what they see, and how the rest of the world reacts to what they've done. It gets really dark in some places, but there's a utopian vision throughout that I found incredibly compelling. The world we choose to build is the one we'll inhabit.

Thursday, July 6, 2017

How to Make a Python Module Subscriptable

This might not be a good idea -- but it is a cool one.

Say you've got a Python dictionary with some data in it. There are two ways (well, two simple ways) to access its contents: via subscripting, or via a .get method.

d.get(k) defaults to None if a key is not found, whereas d[k] throws a KeyError. When the key is present, though, these two ways of getting data are more or less equivalent.

The same goes for nested dictionaries:

The particularly astute reader might already have sense of where this is going.

I'm writing a module to manage and expose a JSON config file to a Python app, and internally it represents the config using native Python data types. To the rest of the world, access is mediated via a .get call:

The idea is that other code, after import config, can call config.get and interface with the module similarly to how you'd deal with a plain dictionary. But here's the catch: the config data includes nested dictionaries. Accessing these would involve either chaining .get calls or mixing .get and subscript notation -- either of which would lead to ugly code.

What to do? There are a couple options here.

The simple, easy option would be to find a different way of exposing the config file.

The other option would be to find a way to make the entire module subscriptable, and to make subscripting it return the correct results.

I'm not really opposed to either option, but I know which one sounds more fun.

Internally, Python handles subscripting by calling an instance's __getitem__ method. But just defining a function named __getitem__ at module level won't work:

What's going wrong here? That's a simple question with a hard answer. The answer also varies somewhat depending on how far into the weeds of Python's object-oriented internals we want to wade. A few explanations, tuned to varying comfort levels:

Python doesn't make it easy for us to override 'magic' functions like __getitem__ at the module level, for that way madness lies.

Python wants __getitem__ to be a bound method, not just a function.

Specifically, __getitem__ must be bound to the object whose scope it's in (i.e. this module), something that there's no real pretty way of doing from inside the module body.

This last one explains why the following fails:

It's starting to look like our goal of making the module subscriptable would require some deep, dark wizardry, maybe even hooking on module import or something equally perverse. The good news is that we can contain the perversity to a reasonably small area. The solution is to have the module overwrite its own entry in the sys.modules registry, replacing itself with a tailor-made object possessing the desired bound method. Check it out:

And there you have it! We use __name__ to guarantee we're indexing into sys.modules at the right place. We pass the name of the module ("a") to the object constructor, which was inherited from types.ModuleType and expects a module name.

So there you have it: that's how to make a module subscriptable. Similar tricks would probably work for making a module e.g. callable, or really giving it any combination of properties implemented through __magic__ functions.

The more you know.

Say you've got a Python dictionary with some data in it. There are two ways (well, two simple ways) to access its contents: via subscripting, or via a .get method.

d = {'pears': 'good', 'peaches': 'meh', 'kumquats': 'yes please'}

d['kumquats'] # subscripting

d.get('pears') # .get

d.get(k) defaults to None if a key is not found, whereas d[k] throws a KeyError. When the key is present, though, these two ways of getting data are more or less equivalent.

The same goes for nested dictionaries:

d = {

'eggs': {

'breakfast': 'maybe',

'lunch': 'i guess',

'dinner': 'nah'

},

'sausage': {

'breakfast': 'deffo',

'lunch': 'yee',

'dinner': 'why not'

},

'sushi': {

'breakfast': 'wtf',

'lunch': 'erryday',

'dinner': 'sometimes'

}

}

d['eggs']['lunch'] # this works

d.get('sushi').get('breakfast') # so does this

d.get('sausage')['breakfast'] # this too -- but it's mega ugly

The particularly astute reader might already have sense of where this is going.

I'm writing a module to manage and expose a JSON config file to a Python app, and internally it represents the config using native Python data types. To the rest of the world, access is mediated via a .get call:

class Config:

... # load config file data, etc

def get(self, *args, **kwargs):

return self.config.get(*args, **kwargs)

# config query singleton -- prevents unnecessarily re-reading conf file

_config = Config()

get = _config.get

The idea is that other code, after import config, can call config.get and interface with the module similarly to how you'd deal with a plain dictionary. But here's the catch: the config data includes nested dictionaries. Accessing these would involve either chaining .get calls or mixing .get and subscript notation -- either of which would lead to ugly code.

What to do? There are a couple options here.

The simple, easy option would be to find a different way of exposing the config file.

The other option would be to find a way to make the entire module subscriptable, and to make subscripting it return the correct results.

I'm not really opposed to either option, but I know which one sounds more fun.

Internally, Python handles subscripting by calling an instance's __getitem__ method. But just defining a function named __getitem__ at module level won't work:

user@development:/tmp/tests$ cat a.py

import random

def __getitem__(self, key):

return random.choice(("o shit lol", "whaa", "no way", "lmfao"))

user@development:/tmp/tests$ cat b.py

import a

for _ in range(10):

print(a[_])

user@development:/tmp/tests$ python3 b.py

Traceback (most recent call last):

File "b.py", line 4, in <module>

print(a[_])

TypeError: 'module' object is not subscriptable

What's going wrong here? That's a simple question with a hard answer. The answer also varies somewhat depending on how far into the weeds of Python's object-oriented internals we want to wade. A few explanations, tuned to varying comfort levels:

Python doesn't make it easy for us to override 'magic' functions like __getitem__ at the module level, for that way madness lies.

Python wants __getitem__ to be a bound method, not just a function.

Specifically, __getitem__ must be bound to the object whose scope it's in (i.e. this module), something that there's no real pretty way of doing from inside the module body.

This last one explains why the following fails:

user@development:/tmp/tests$ cat a.py

import random

class Foo():

def __getitem__(self, key):

return random.choice(("o shit lol", "whaa", "no way", "lmfao"))

_foo = Foo()

__getitem__ = _foo.__getitem__

user@development:/tmp/tests$ cat b.py

import a

for _ in range(10):

print(a[_])

user@development:/tmp/tests$ python3 b.py

Traceback (most recent call last):

File "b.py", line 4, in <module>

print(a[_])

TypeError: 'module' object is not subscriptable

It's starting to look like our goal of making the module subscriptable would require some deep, dark wizardry, maybe even hooking on module import or something equally perverse. The good news is that we can contain the perversity to a reasonably small area. The solution is to have the module overwrite its own entry in the sys.modules registry, replacing itself with a tailor-made object possessing the desired bound method. Check it out:

user@development:/tmp/tests$ cat a.py

import random

import sys

import types

class Foo(types.ModuleType):

def __getitem__(self, key):

return random.choice(("o shit lol", "whaa", "no way", "lmfao"))

sys.modules[__name__] = Foo("a")

user@development:/tmp/tests$ cat b.py

import a

for _ in range(10):

print(a[_])

user@development:/tmp/tests$ python3 b.py

no way

lmfao

no way

o shit lol

no way

whaa

o shit lol

whaa

whaa

o shit lol

And there you have it! We use __name__ to guarantee we're indexing into sys.modules at the right place. We pass the name of the module ("a") to the object constructor, which was inherited from types.ModuleType and expects a module name.

So there you have it: that's how to make a module subscriptable. Similar tricks would probably work for making a module e.g. callable, or really giving it any combination of properties implemented through __magic__ functions.

The more you know.

Sunday, June 11, 2017

Resisting Man-in-the-Middle Attacks in P2P Networks

Previously:

Theseus: A Robust System for Preserving and Sharing Research

Resisting Sybil Attacks in Distributed Hash Tables

Securely Finding Friends via DHT Dead Drops

Distributed Search in Theseus

The State of Theseus, One Month In

Theseus Protocol v0.1 Overview

Bloom Filter Parameters for Distributed Search

Message Encryption in Theseus

In the previous post, Message Encryption in Theseus, I outlined how on top of robust encryption, Theseus can handle optional public-key authentication. This authentication gives us a way of defeating man-in-the-middle (MitM) attacks, but only if at least one of the communicating peers trusts a key possessed by the other. Since most interactions in peer-to-peer networks take place between total strangers, this limitation carries with it some unpleasant drawbacks.

One solution would be to have peers vouch for each other. For instance, when replying to a query with a list of peers, one could include not only the listed peers' contact info but also their public key fingerprints. Then when the querying peer connects to a new peer from this list, they could request the new peer's public key, check it against the provided fingerprint, authenticate using the key if it matches, or bail out or degrade their trust in the connection if the fingerprint and key don't match.

This seems like it would work, but at the cost of necessarily sharing public key identity information very promiscuously. If these are long-term identity keys we're talking about, then that's a big problem as far as anonymity, deniability, etc are concerned. As discussed in the previous post, it makes sense from a privacy angle to be reluctant to share long-term identity information with anyone you don't have a mutual trust relationship with.

That's no reason, though, why we couldn't add dedicated keys for this purpose. Since the term "ephemeral key" is already taken, let's call these, say, "node keys".

A peer could generate a node key immediately before first connecting to the network and have this key last only as long as their session does. They could share the key with anyone they connect to, but avoid explicitly associating it with their long-term cryptographic identity (e.g. by authenticating using both keys only with well-trusted remote peers, if ever). The only thing this key ever gets explicitly associated with is the peer's contact info. It would probably make sense to put an upper limit on the lifespan of such keys -- maybe six or twelve hours.

What is accomplished here? Man-in-the-middle attacks are made considerably more difficult and complex. What would it take to carry out a successful MitM attack with this system in place? That's a good and tricky question, the answer to which depends somewhat on the goal of the attack.

If the goal is to read and potentially alter all traffic sent to or from a specific peer, the MitM attack would have to intercept all encrypted traffic to or from that peer, swap out the keys used for all ephemeral ECDH handshakes, actively replace the advertised node key with an attacker-controlled key every time it is sent, alter the contents of all re-handshake negotiation messages to reflect the attacker-controlled key, and actively MitM those extra handshakes as well.

Such an attack is not impossible, of course, but it would require considerable resources, and if a connection can be established with even one trusted peer then the attack can be detected. The attacker could of course attempt to close the trusted peers' connection prior to their authentication to prevent this -- but that would likely seem suspicious in and of itself.

Note that the impact of malicious nodes lying about other nodes' node key fingerprints when returning contact info is minimal, since they don't really accomplish much that they couldn't've also accomplished by refusing to refer those nodes in the first place. Really, the worst case scenario is that the connecting node comes to believe that something malicious is taking place -- which, since they were just talking to a malicious node, is actually correct.

Friday, June 9, 2017

Message Encryption in Theseus

Previously:

Theseus: A Robust System for Preserving and Sharing Research

Resisting Sybil Attacks in Distributed Hash Tables

Securely Finding Friends via DHT Dead Drops

Distributed Search in Theseus

The State of Theseus, One Month In

Theseus Protocol v0.1 Overview

Bloom Filter Parameters for Distributed Search

Brief Summary

Theseus will use the Noise Protocol Framework.This will allow all network traffic to be encrypted, with flexible authentication and many other desirable properties as detailed below.

This post explicitly obsoletes the Theseus Protocol v0.1 Overview. That overview deliberately lacked specifics on message encryption. It was expected that said specifics would necessitate some changes, and this has indeed proven to be the case. A v0.2 specification is forthcoming.

Background

Prior to learning of the Noise protocol framework I was (with considerable trepidation) planning on designing a custom cryptographic protocol modeled closely on OTR and Signal but with modifications to fit our use case. Both of those protocols came close but ultimately fell just short of satisfying the rigorous and slightly unusual security parameters Theseus is aiming for.Desired properties for the encrypted channel established by this protocol include the standard AE fare, namely confidentiality, integrity, and authentication. On top of these properties, we desire forward secrecy and strong resistance to surveillance.

By strong resistance to surveillance, I mean all of the above plus several more properties: traffic analysis should yield little to no information, with message lengths and traffic patterns leaking minimal data and protocol traffic indistinguishable from randomness; relatedly, the protocol must be as hard as possible to fingerprint; and lastly, the cryptographic identities of the participants must be hidden from passive observers.

Efficiency, simplicity of design, and avoidance of bloat are also all high priorities. The last and perhaps most troublesome requirement is that authentication be optional, since parties may variously desire mutual, one-way, or no authentication depending on context. The protocol should be able to handle all three (or four, depending on how you count) of these cases straightforwardly and securely.

Startlingly few protocols even come close to meeting these criteria; virtually none meet all of them. Note in particular that cryptographic mainstays like TLS clearly fall short, as do standard message-based protocols like OTR and Signal (albeit by much smaller margins).

As I've mentioned before, the conventional wisdom concerning crypto algorithms and protocols is: whatever you do, don't roll your own. Unable to find any existing protocol meeting all our desired security properties, I was on the verge of breaking this advice and rolling a custom protocol when I discovered the Noise Protocol Framework, which describes something remarkably close to (though considerably better-designed than) what I had in mind -- something which effortlessly provides every property listed above and a few more for good measure. I'm amazed that it isn't more well-known. See the Github wiki's list of properties and protocol comparisons. Having now reviewed the specification in full, I really could not be happier or more impressed by the work that's clearly gone into it.

Noise is less a concrete protocol and more a framework for assembling protocols by mixing and matching stand-alone components in robust ways. What follows are some specifics on how Noise will be used in Theseus.

Handshake and Message Encryption

As mentioned before, a primary goal is to avoid any features that could lead to easy protocol fingerprinting. Negotiating handshake parameters (such as e.g. ciphers) in the clear would obviously violate this design principle. What we'll opt for here is to specify a default handshake parameterization to first establish an encrypted channel, and allow users to optionally re-negotiate a second handshake once communicating within this channel. This allows us to encrypt absolutely everything aside from an initial exchange of ephemeral public keys.

The default handshake will be an exchange only of ephemeral keys, i.e. the Noise_NN pattern. Future handshakes negotiated within this channel may be of the patterns Noise_NK, Noise_KN, or Noise_KK, with an optional extra PSK potentially determined during negotiation and applied via the psk0 modifier.

The default ciphersuite will be Noise448, which specifies ChaCha20/Poly1305 for AE and the conservatively-defined Ed448-Goldilocks curve for elliptic curve operations. We'll have the default hash function be SHA512.

Thus the protocol name for Theseus's default Noise protocol is:

Noise_NN_448_ChaChaPoly_SHA512

If peers wish to negotiate a second handshake, that handshake may be any of:

Noise_NK_448_ChaChaPoly_SHA512

Noise_KN_448_ChaChaPoly_SHA512

Noise_KK_448_ChaChaPoly_SHA512

Noise_NKpsk0_448_ChaChaPoly_SHA512

Noise_NKpsk0_448_ChaChaPoly_SHA512

Noise_KNpsk0_448_ChaChaPoly_SHA512

Noise_KKpsk0_448_ChaChaPoly_SHA512

Noise_KKpsk0_448_ChaChaPoly_SHA512

Later versions of the Theseus protocol may expand this list, in particular potentially expanding the list of permitted ciphersuites. The handshake re-negotiation is designed to gracefully support such extensions.

One last note: Noise specifies a max message size of 65535 bytes, with the handling of larger messages being considered an application concern. As such, Theseus will prepend total message length to all plaintexts as a 16-bit big-endian integer, as recommended in the framework specification.

One last note: Noise specifies a max message size of 65535 bytes, with the handling of larger messages being considered an application concern. As such, Theseus will prepend total message length to all plaintexts as a 16-bit big-endian integer, as recommended in the framework specification.

Handshake Re-Negotiation

Once an encrypted channel has been established using ephemeral keys, peers may optionally use this channel to negotiate authentication. Once they agree on parameters like a handshake protocol name and which public keys they wish to use in authentication, they may discard their current CipherState objects and, within the same TCP connection, start from scratch executing a new handshake using the previously agreed-upon keys (and, optionally, a similarly agreed-upon PSK).

The challenge for re-negotiation is that two peers must agree on three things:

One last note: padding should use cryptographically secure random number generation in order to avoid identifiable patterns in the output (which would undercut the entire point of including padding in the first place). If this random number generation is delegated to the OS, the designer should take deliberate steps to avoid depleting system entropy. This a situation would likely only be encountered in extremely high-traffic edge cases, if ever, but it's still worth mentioning since entropy exhaustion can catastrophically undermine the security of ephemeral key generation. This issue varies so much by OS, though, that I think that's all I'll say on it for now.

The challenge for re-negotiation is that two peers must agree on three things:

- What sort of authentication to use

- That is, whether authentication should be one-way or mutual

- Or in other words, whether to handshake with Noise_NK, Noise_KN, or Noise_KK.

- What public keys to use in the handshake

- Whether to include a PSK, and if so, what its value should be.

The difficulty is that some peers may understandably be reluctant to advertise to a total stranger the list of all public keys by which they are willing to authenticate. After all, doing so could have obvious and severe ramifications where anonymity is concerned. As such, a peer may be willing to authenticate using a given key if and only if the remote peer demonstrates prior knowledge of this key, and/or if the remote peer is also willing to authenticate using a specific key of their own.

Note that such a model would not completely obviate the abovementioned privacy concern -- what it does is reduce the issue from peers promiscuously advertising identity keys to those peers exposing what is essentially an oracle by which an attacker could test whether the remote peer is willing to authenticate using a single given key.

I see this as a minor concern, as abuse of the oracle should be very easy to detect, but it is still worth pointing out as both an informational note and an acknowledgement that there is perhaps room for further improvement here.

We'll define two protocol messages which together allow for re-negotiation without unnecessarily promiscuous disclosure of information: handshake_suggest and handshake_request.

Messages of the former type are purely informational and may be exchanged any number of times. Their purpose is to communicate re-handshake parameters that the sending party would find acceptable.

A message of the latter type specifies concrete re-handshake parameters. If the remote peer finds these parameters unacceptable, it may reply with an error code. A non-error response indicates that the remote peer accepts the re-handshake parameters.

As soon as acceptance is indicated, both peers should discard their existing protocol state, remembering only the agreed-upon parameters for the new handshake, and the new handshake should then be carried out, with the party who first sent the handshake_request message serving as the handshake initiator.

We'll defer on providing a precise specification of the parameters of handshake_suggest and handshake_request pending the v0.2 spec draft.

Miscellaneous Considerations

Public Key Disclosure

As discussed above, some parties may be reluctant to disclose their public keys to an unauthenticated third party. If both peers harbor this concern, a curious impasse is reached. This is a hard problem to crack, with a number of seemingly obvious solutions harboring non-obvious but fatal flaws. The idea of an approach involving zero-knowledge proofs seems promising but threatens to introduce to the protocol worrying levels of complexity for marginal benefit. This might be an interesting problem for a particularly sharp and motivated contributor to spend some time mulling over.

In-Order Message Delivery

Just as an aside, it's worth noting that the vanilla Noise framework works best in environments guaranteeing in-order delivery of messages. Out-of-order delivery could lead to legitimate messages being decrypted with the wrong key material, causing them to appear invalid. Other protocols such as Signal and OTR face a similar problem and solve it by various means such as order-specifying message headers. These approaches solve the problem but also introduce trivially-recordable metadata and make the protocol significantly easier to fingerprint -- both undesirable properties.

Applications using Noise in spaces where in-order delivery is not guaranteed typically seem to solve the problem by precomputing a certain number of keys and thus maintaining a sliding "window" within which they can gracefully handle out-of-order delivery, i.e. if you precompute five keys ahead then you can handle out-of-order delivery as long as the delivery order doesn't place any message more than five notches away from its spot in the intended message order.

I mention all this purely for informational purposes, as the Theseus control protocol -- the component of the system using Noise -- will operate over TCP, which guarantees in-order message delivery.

Man-In-The-Middle Attacks

Authentication is necessary whenever possible in order to defeat man-in-the-middle attacks, which can otherwise straightforwardly bypass Diffie-Hellman of both the traditional and elliptic-curve varieties. The initial handshake, in which only ephemeral keys are exchanged and therefore no cryptographic statements of identity are made by either party, is thus vulnerable to man-in-the-middle compromise by an active attacker. There isn't really any way around this, since we need to support connections between complete strangers (who obviously won't know or trust each other's public keys, and thus have no easy way of authenticating to one another).

Note that if either party authenticates to the other, the success of this authentication would necessarily indicate the absence of a man-in-the-middle attack. Note further that if an active MitM attacker were to interfere with the re-handshake negotiation, the most harm they could do would be to either cause the second handshake to fail or else prevent it from happening at all.

Of course, if an attacker is in control of a public key well-trusted by a given peer, then naturally the attacker can impersonate the identity associated with this key to this peer and carry out a man-in-the-middle attack in this way. Dealing with such a threat is well beyond the scope of this post (and if you have any ideas on how to do it, I'd gladly buy you a beer...)

Note that the authentication model described above makes no mention of public key roles, leaving itself open to supporting basically any trust model a given client may wish to adopt. This is intentional, for flexibility's sake. Consider it a form of future-proofing. I do have some ideas on a reasonable baseline method of managing public-key identities to get some of their benefits without needlessly compromising anonymity; this'll likely be the subject of my next post.

Note that if either party authenticates to the other, the success of this authentication would necessarily indicate the absence of a man-in-the-middle attack. Note further that if an active MitM attacker were to interfere with the re-handshake negotiation, the most harm they could do would be to either cause the second handshake to fail or else prevent it from happening at all.

Of course, if an attacker is in control of a public key well-trusted by a given peer, then naturally the attacker can impersonate the identity associated with this key to this peer and carry out a man-in-the-middle attack in this way. Dealing with such a threat is well beyond the scope of this post (and if you have any ideas on how to do it, I'd gladly buy you a beer...)

Note that the authentication model described above makes no mention of public key roles, leaving itself open to supporting basically any trust model a given client may wish to adopt. This is intentional, for flexibility's sake. Consider it a form of future-proofing. I do have some ideas on a reasonable baseline method of managing public-key identities to get some of their benefits without needlessly compromising anonymity; this'll likely be the subject of my next post.

Explicit Connection-Ending

The Noise protocol specification suggests that an explicit way of indicating end-of-connection be included at the application level, to give applications a way of distinguishing between benign disconnects and attacker interference. This is a good idea which will be included in the v0.2 Theseus spec draft.

Implementation

The intent is to implement Theseus in Python 3. It is therefore worth mentioning Python 3 does not yet have a library for Noise, though there is an ongoing project to implement one though bindings to the C reference implementation via ctypes. The library appears to be under active development, with Python 2.7 support available and Python 3 support in progress.

Protocol Fingerprinting & Info Leaks

One fantastic property of the Noise protocol is its resistance to fingerprinting. True to its name, virtually all protocol traffic is indistinguishable from random noise, although this varies somewhat depending on context: for instance, handshakes that lead with long-lived public identity keys provide identifiable data to a knowledgable adversary, and public keys either ephemeral or long-lived can be identified as such with reasonable confidence unless they are cleverly encoded using a scheme such as Elligator. These schemes tend to come with complications of their own, but that's a story for another time.

Note that even if message contents are indistinguishable from random noise, message sizes and traffic patterns still convey some data to the attacker. These are harder problems to solve.

Reducing the information conveyed by traffic patterns is a protocol design problem more than an encryption problem. The v0.1 protocol overview discussed this matter at some length, and the (relatively promising) ideas described therein are absolutely going to be carried forward in v0.2 and hopefully beyond.

The amount of information conveyed by message size can be reduced by padding our messages with random noise. This is discussed in brief under the Noise specification's section on "Application Responsibilities". One thing worth noting is that the Noise protocol specifies a maximum message size. This allows extremely security-focused users to pad all their messages to said size, thus eliminating all variation in message size. This size limit also means that messages larger than the size limit must be broken up. So even with an aggressive padding scheme, some size data will necessarily leak in the case of very large messages.

On this subject, the Noise framework specification says:

Length fields: Applications must handle any framing or additional length fields for Noise messages, considering that a Noise message may be up to 65535 bytes in length. If an explicit length field is needed, applications are recommended to add a 16-bit big-endian length field prior to each message.65535 bytes is large enough that message fragmentation doesn't seem likely to cause problems in practice. It may come up in some situations e.g. when returning lots of search results or Bloom filters, but this is probably fine.

One last note: padding should use cryptographically secure random number generation in order to avoid identifiable patterns in the output (which would undercut the entire point of including padding in the first place). If this random number generation is delegated to the OS, the designer should take deliberate steps to avoid depleting system entropy. This a situation would likely only be encountered in extremely high-traffic edge cases, if ever, but it's still worth mentioning since entropy exhaustion can catastrophically undermine the security of ephemeral key generation. This issue varies so much by OS, though, that I think that's all I'll say on it for now.

Monday, May 8, 2017

Bloom Filter Parameters for Distributed Search

Previously:

Theseus: A Robust System for Preserving and Sharing Research

Resisting Sybil Attacks in Distributed Hash Tables

Securely Finding Friends via DHT Dead Drops

Distributed Search in Theseus

The State of Theseus, One Month In

Theseus Protocol v0.1 Overview

Bloom filters are central to both the routing and content-indexing functions of Theseus's distributed search algorithm. A filter's false-positive probability increases monotonically as a function of the number of keys in the filter, as well as on two pre-set parameters: the filter size and the number of hash functions used. The expected number of bits set to 1 in the filter also increases monotonically as a function of n. Derivations of the equations governing these values can be found in section II.A of this paper, which also provides a treatment of the idea of compressing Bloom filters in certain situations, e.g. for network transfer or bulk storage. The question is asked of whether a benefit can be obtained from this compression, and the answer is that it of course depends on the sparsity of the filter.

The strategies suggested by that paper for parameterizing a Bloom filter with compression in mind involve significantly increasing the filter's uncompressed size in memory. This is a fairly unattractive cost.

"Correctly" optimizing a Bloom filter's parameters requires having a good estimate of the number of elements the filter will contain. If this value is expected to vary widely, as it likely would in Theseus, then such an optimization can't really be performed in any particularly rigorous way.

The cost of underestimating the number of elements is that your filter's false positive rate will be untenably high. The cost of overestimating is that you'll end up with a sparse, inefficient filter.

I observed in Resisting Sybil Attacks in Distributed Hash Tables that having honest peers operate multiple DHT nodes would help increase their resilience to horizontal Sybil attacks. Another benefit to operating multiple DHT nodes is that one could spread one's content library across them and have each node index only its own subset of the total library. So in the case of Theseus, if a peer has more data to share than they can fit in a single Bloom filter while maintaining an acceptable false-positive rate, they can simply partition the data to optimally-sized subsets & in this way sidestep the problem. Thus for Theseus the cost of underestimating the number of elements is actually very low.

The cost of overestimating the number of elements is also fairly low: sparse filters, as we just discussed, are amenable to compression. Thus we can compress them before transmission to keep from wasting bandwidth -- and if we're tight on working memory, we can compress them at rest as well. Making compression optional allows us to do all this without impacting performance for saturated filters.

So really, as long as our estimate isn't wildly off-base, the system should be able to gracefully handle outliers on either extreme. This means that there is in fact a wide range of parameterizations "good enough" for our purposes. How do we choose one over the others? Lacking a better motivator, we might as well make the choice based on convenience of implementation.

What's convenient? Well, having the filter size be a power of 2 would be convenient, because it'd make it easier to get indices from a cryptographic hash function. Having the 2's exponent be a multiple or 4 would also be quite nice, to make the hex constant for the bitmask more readable. Say 2¹⁶ bits, then. The next question is what we want the capacity of the filter to be -- or, equivalently, how many bits per element we want to allocate.

If we choose, say, 10 bits per element, our filter would cap out at 2¹⁶ / 10 ≈ 6554 elements. The optimal number of hash functions would be ln(2) * 10 = 6.93... ≈ 7, and the false positive rate at saturation would be (1-e^(-7/10))^7 = 0.0081... ≈ an 0.8% chance of a false positive.

6554 seems a little small, though -- especially considering that in all likelihood any given file in the system would have at least several unique filter elements associated with it. What if we try fewer bits per element?

Going down to 7 bits per element raises our cap to 2¹⁶ / 7 ≈ 9362 elements. ln(2) * 7 = 4.8... ≈ 5. The false positive chance increases to about 3.5%. These figures sound reasonable enough to me, so let's say our provisional Bloom filter parameters are 2¹⁶ bits (= 2¹³ bytes) and 5 hash functions.